Revisiting, Benchmarking and Exploring API Recommendation: How Far Are We?

Paper Information

Paper Name: Revisiting, Benchmarking and Exploring API Recommendation: How Far Are We?

Journal: IEEE Transactions on Software Engineering

Authors: Yun Peng, Shuqing Li, Wenwei Gu, Yichen Li, Wenxuan Wang, Cuiyun Gao, and Michael Lyu

Abstract

Application Programming Interfaces (APIs), which encapsulate the implementation of specific functions as interfaces, greatly improve the efficiency of modern software development. As the number of APIs grows rapidly, developers can hardly be familiar with all of them and usually need to search for appropriate APIs to use. Considerable effort has therefore been devoted to improving the API recommendation task. However, it has become increasingly difficult to gauge the performance of new models due to the lack of a uniform definition of the task and a standardized benchmark. For example, some studies regard the task as a code completion problem, while others recommend relevant APIs given natural language queries. To reduce these challenges and better facilitate future research, in this paper we revisit the API recommendation task and aim at benchmarking the approaches. Specifically, the paper groups the approaches into two categories according to the task definition, i.e., query-based API recommendation and code-based API recommendation. We study 11 recently proposed approaches along with 4 widely used IDEs. A benchmark named APIBench is then built for the two respective categories of approaches. Based on APIBench, we distill some actionable insights and challenges for API recommendation. We also derive some implications and directions for improving the performance of recommending APIs, including appropriate query reformulation, data source selection, low resource setting, user-defined APIs, and query-based API recommendation with usage patterns.

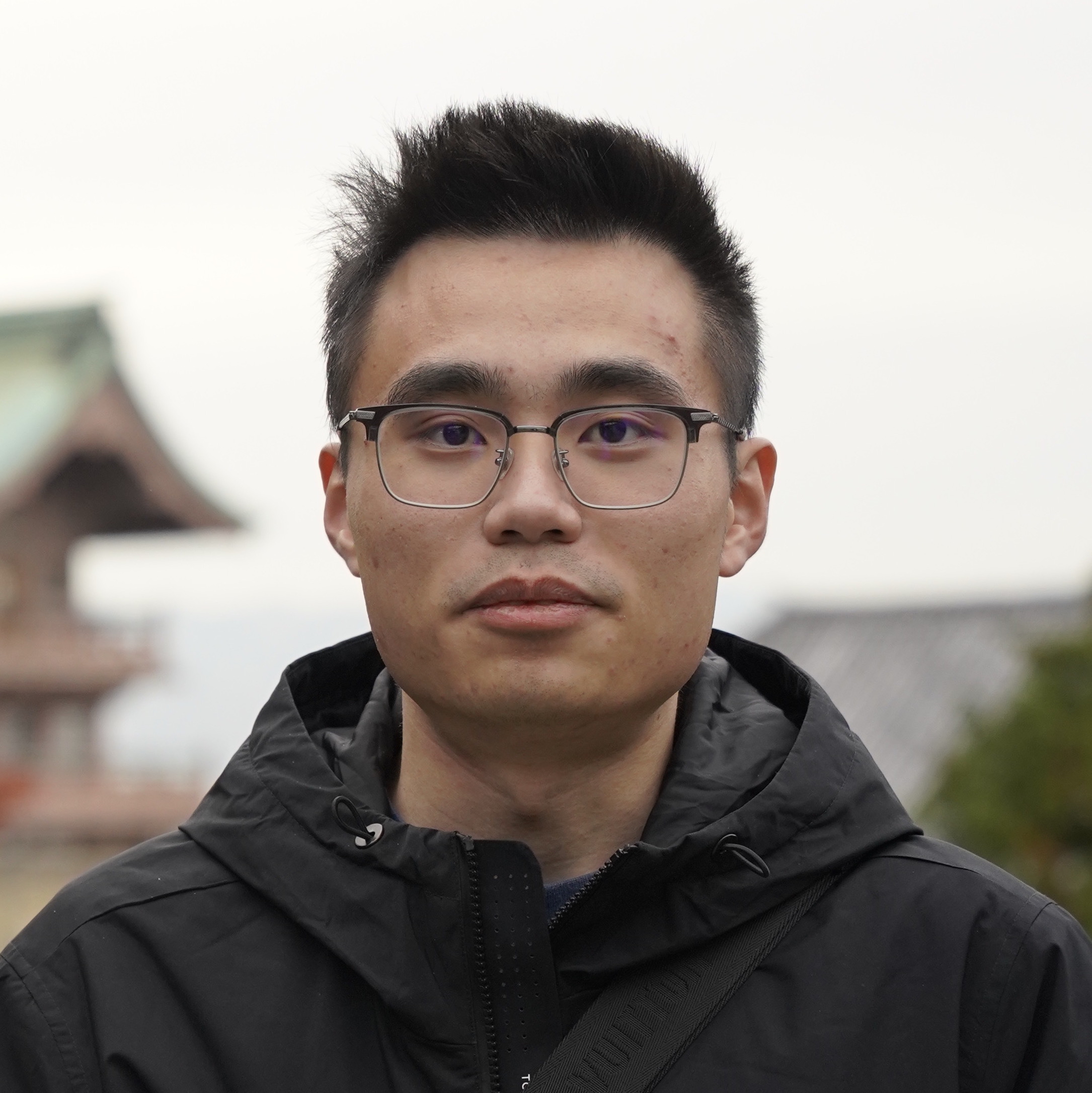

Workflow of API recommendation

Findings

- Existing approaches fail to predict 57.8% of the method-level APIs that could be successfully predicted at the class level. The performance achieved by the approaches is far from what practical usage requires. Accurately recommending method-level APIs remains a great challenge.

- Learning-based methods do not necessarily outperform retrieval-based methods in recommending more correct APIs. The insufficient query-API pairs for training limit the performance of learning-based methods.

- Current approaches cannot rank the correct APIs well, considering the huge gap between the scores of Success Rate and the other ranking metrics.

- Query reformulation techniques are quite effective in helping query-based API recommendation approaches give the correct API by adding an average boost of 27.7% and 49.2% on class-level and method-level recommendations, respectively.

- Query expansion is more stable and effective than query modification in helping current query-based API recommendation approaches give correct APIs.

- In query expansion, adding predicted API class names or relevant words to queries is more useful than adding other tokens.

- BERT-based data augmentation shows superior performance in query modification compared with other query modification techniques.

- Expanding queries or modifying queries with appropriate data augmentation methods can improve the ranking performance of the query-based API recommendation techniques.

- Original queries raised by users usually contain noisy words which can bias the recommendation results, and query reformulation techniques should consider involving noisy-word deletion for a more accurate recommendation.

- Apart from official documentation, using other data sources such as Stack Overflow can significantly improve the performance of query-based API recommendation approaches.

- DL models such as TravTrans show superior performance on code-based API recommendation by achieving a Success Rate@10 of 0.62, while widely used IDEs also obtain satisfactory performance by achieving a Success Rate@10 of 0.5 to 0.6.

- Although current approaches achieve good performance on recommending APIs from standard and popular third-party libraries, they struggle to correctly predict user-defined APIs.

- Context length can impact the performance of current approaches in API recommendation. The approaches perform poorly on extremely short or extremely long functions, and accurate recommendation for extremely short functions is the more challenging case.

- The location of recommendation points can affect the performance of current approaches. Current approaches perform worst at front recommendation points due to limited context. Some of them also suffer from overwhelming context at back recommendation points.

- Current approaches using fine-grained context representation are sensitive to the domain of the training data and suffer from performance drops when recommending cross-domain APIs.

- Training on multiple domains helps current approaches recommend APIs in each individual domain, and the performance is generally better than training on a single domain only.
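
Several findings above are stated in terms of Success Rate@k and other ranking metrics. The following is a minimal sketch of how such numbers can be computed, not the paper's evaluation code. It assumes a single ground-truth API per case, uses Mean Reciprocal Rank (MRR) only as one illustrative ranking metric, and all function names and sample data are hypothetical.

```python
from typing import List


def success_rate_at_k(ranked: List[List[str]], truth: List[str], k: int) -> float:
    """Fraction of cases whose correct API appears in the top-k recommendations."""
    hits = sum(1 for recs, gold in zip(ranked, truth) if gold in recs[:k])
    return hits / len(truth)


def mean_reciprocal_rank(ranked: List[List[str]], truth: List[str]) -> float:
    """Average of 1/rank of the correct API; a case with no hit contributes 0."""
    total = 0.0
    for recs, gold in zip(ranked, truth):
        if gold in recs:
            total += 1.0 / (recs.index(gold) + 1)
    return total / len(truth)


# Hypothetical recommendations for three cases (illustrative data only).
ranked = [
    ["List.add", "Map.put", "String.format"],
    ["Map.get", "List.size", "Files.readAllLines"],
    ["Math.max", "Math.min", "Math.abs"],
]
truth = ["String.format", "Files.readAllLines", "Objects.hash"]

print(success_rate_at_k(ranked, truth, k=3))  # correct API is in the top 3 for 2 of 3 cases
print(success_rate_at_k(ranked, truth, k=1))  # never ranked first
print(mean_reciprocal_rank(ranked, truth))    # low, because hits sit at rank 3
```

In this toy data the correct API is found in two of three cases but always at the bottom of the list, so Success Rate@3 is much higher than MRR. This is the kind of gap the ranking finding describes.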

Benchmark

APIBench contains two benchmarks: APIBench-Q for query-based API recommendation approaches and APIBench-C for code-based API recommendation approaches.

APIBench has instances in Python and Java.

APIBench-Q:

It contains more than 10,000 original queries from Stack Overflow and tutorial websites. It also contains more than 100,000 reformulated queries generated from the original queries by different data augmentation approaches.
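
To make the idea of a reformulated query concrete, here is a toy sketch of two reformulation steps mentioned in the findings: noisy-word deletion and query expansion with a predicted API class name. It is an illustration under stated assumptions, not the procedure used to build APIBench-Q. The word list, the function names, and the predicted class are all hypothetical, and the class-name prediction is assumed to come from some upstream model.

```python
# Hypothetical list of noisy words; a real system would derive this differently.
NOISY_WORDS = {"please", "help", "how", "do", "i", "a", "the", "in", "to"}


def delete_noisy_words(query: str) -> str:
    """Drop words from the hypothetical noisy-word list and trailing punctuation."""
    tokens = [word.strip("?.,!") for word in query.split()]
    kept = [t for t in tokens if t and t.lower() not in NOISY_WORDS]
    return " ".join(kept)


def expand_query(query: str, predicted_class: str) -> str:
    """Append a predicted API class name to the query (query expansion)."""
    return f"{query} {predicted_class}"


original = "How do I read a file line by line in Java?"
cleaned = delete_noisy_words(original)
# "BufferedReader" stands in for the output of an assumed class-level predictor.
reformulated = expand_query(cleaned, "BufferedReader")

print(cleaned)       # read file line by line Java
print(reformulated)  # read file line by line Java BufferedReader
```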

APIBench-C:

It contains more than 2,000 GitHub projects in Python and Java. All projects are divided into five domains.